A website is the foundation of every online and local business. It serves as the central point for users to interact with your brand, goods, and services. Thus, maintaining your website’s performance, availability, and security is critical.

But how can you maintain a close check on all of these things without being overwhelmed?

The answer lies in using the right website monitoring tools. In 2024, the landscape of such tools is extensive and diverse, covering a wide range of requirements and budgets. This guide aims to help you pick the ideal tool, whether you’re a small startup or a large organization.

What is Website Monitoring?

Website monitoring is the process of testing and verifying that end users can interact with a website or online service as intended. It involves checking the website’s performance, availability, functionality, and security to ensure smooth operation and user satisfaction. This ongoing monitoring helps identify issues such as outages, slow page load times, broken links, and security breaches before they have a major effect on users or the business.

Website monitoring can be broken down into two categories: Synthetic Monitoring and Real User Monitoring (RUM). Both provide distinct insights and value, but in different ways. Understanding these distinctions is critical to choosing the best monitoring method for your needs.

Synthetic Monitoring

Synthetic monitoring, also known as proactive or artificial monitoring, is the process of mimicking user interactions with a website or application using automated scripts. This approach enables you to test web performance and availability in a controlled environment, without requiring actual user traffic. It’s similar to sending a robot to a store to make sure it’s open and running properly before customers arrive. We covered Synthetic Monitoring in depth in an earlier article, so here is only a quick recap.

Key Features:

- Predefined Actions: Tests are based on scripted interactions predefined by the user, such as logging in, navigating through pages, or completing a transaction.

- Global Perspective: You can run these tests from multiple geographic locations to measure how performance varies across different regions.

- 24/7 Monitoring: Because it doesn’t rely on real user traffic, synthetic monitoring can operate around the clock, identifying issues during off-peak hours.

- Consistency: Tests are repeatable and consistent, providing a baseline for performance benchmarks and comparisons over time.

Benefits:

- Early Problem Detection: Synthetic monitoring can identify issues before they impact real users, allowing for proactive troubleshooting.

- Performance Benchmarking: It offers a consistent baseline for tracking performance improvements or degradations over time.

- Global Availability Checks: You can ensure that your website or application is accessible and performs well from different locations worldwide.
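
To make the idea of a scripted check concrete, here is a minimal sketch in Python. It is not how any particular monitoring product works. The names `run_synthetic_check`, `http_fetch`, and `StepResult`, the 2000 ms threshold, and the rule that only HTTP 200 counts as success are all assumptions chosen for illustration. The fetcher is passed in as a parameter so the same script can run against a real site or a stub.

```python
import time
import urllib.request
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# A fetcher takes a URL and returns an HTTP status code.
Fetcher = Callable[[str], int]


def http_fetch(url: str, timeout: float = 10.0) -> int:
    """Fetch a URL with the standard library and return its status code."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.status


@dataclass
class StepResult:
    name: str
    ok: bool
    elapsed_ms: float
    detail: str


def run_synthetic_check(
    steps: Sequence[Tuple[str, str]],
    fetch: Fetcher = http_fetch,
    max_ms: float = 2000.0,  # assumed threshold for a "slow" step
) -> List[StepResult]:
    """Run each (name, url) step in order and record status and timing."""
    results = []
    for name, url in steps:
        start = time.perf_counter()
        try:
            status = fetch(url)
            ok, detail = status == 200, f"HTTP {status}"
        except Exception as exc:  # network and HTTP errors count as a failed step
            ok, detail = False, f"error: {exc}"
        elapsed_ms = (time.perf_counter() - start) * 1000
        if ok and elapsed_ms > max_ms:
            ok, detail = False, f"slow: {elapsed_ms:.0f} ms"
        results.append(StepResult(name, ok, elapsed_ms, detail))
    return results
```

In practice a scheduler would call `run_synthetic_check` at a fixed interval from several locations and send an alert whenever a step reports `ok == False`.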

Real User Monitoring (RUM)

Real User Monitoring records and analyzes real-time interactions between users and a website or application. It captures information on how real people interact with the website, such as page load times, transaction pathways, and user behavior patterns. Think of it as having observers in the store who watch how customers navigate and experience the purchasing process.

Key Features:

- Real Traffic: RUM relies on actual user interactions, providing insights into real-world performance and user experience.

- Diverse Data: It captures a wide range of metrics, including device type, browser, network conditions, and geographical location of users.

- User Behavior Insights: RUM can offer insights into how user behavior impacts performance, such as which pages are most visited or where users face issues.

Benefits:

- User-Centric Insights: RUM provides a direct look into how real users experience your site, which is crucial for optimizing user satisfaction and engagement.

- Issue Identification: It helps identify specific problems encountered by real users, which might not be covered by synthetic monitoring scripts.

- Performance Optimization: By understanding real user experiences, you can prioritize optimizations that will have the most significant impact on your audience.
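
As a rough illustration of how RUM data can guide prioritization, the sketch below groups page load samples by page and reports a high percentile for each. The sample format (page path plus load time in milliseconds), the function names, and the choice of the 75th percentile are assumptions for this example. Real RUM products collect far richer data.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def p75_load_time_by_page(samples: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Group (page, load_ms) samples by page and return each page's 75th percentile."""
    by_page: Dict[str, List[float]] = defaultdict(list)
    for page, load_ms in samples:
        by_page[page].append(load_ms)
    return {page: percentile(times, 75) for page, times in by_page.items()}
```

A percentile is used instead of an average because a handful of very slow sessions can hide behind a healthy-looking mean. Pages with the highest values are natural candidates to optimize first.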

Comparing Synthetic Monitoring and RUM

While both monitoring techniques are valuable, they serve different purposes:

- Synthetic Monitoring is best suited for baseline performance testing, availability checks, and identifying issues before they affect users. It’s a controlled approach that allows for consistent testing across various conditions.

- Real User Monitoring shines in providing insights into actual user experiences, uncovering real-world issues, and optimizing for real user conditions. It’s dynamic and directly reflects the diversity of an actual user base.

Why Website Monitoring is Non-negotiable

Imagine your website as a busy store. What if the doors randomly closed during the day, or the inside was so disorganized that customers were unable to find their way around? This is what happens when your website is down, slow, or hacked. Website monitoring tools serve as your digital caretakers, keeping the doors open, the lights on, and the shelves organized. However, these tools do more than just prevent problems. They also provide insights into user experience, allowing for adjustments that may greatly improve your site’s speed and, by extension, your business’s profitability.

- Minimizes Downtime: Downtime can be extremely costly, not just in terms of missed sales, but also in terms of consumer trust and brand reputation. Monitoring alerts you to downtime as it occurs, allowing you to resolve it quickly and with minimal effect on your users and company.

- Improves Website Performance: Speed and efficiency are critical for keeping consumers interested. Slow-loading pages can annoy visitors and drive them away. Regular monitoring reveals performance bottlenecks, allowing you to reduce load times and keep your site running quickly and efficiently.

- Ensures Functionality of Website Features: Shopping carts, forms, and third-party services are all common features and integrations used on websites. Monitoring ensures that all of these components perform as expected, allowing people to engage with your site without running into broken features.

- Detects Security Threats: With cybersecurity risks on the rise, monitoring your website for unexpected behavior can serve as a first line of defense against attacks. Prompt detection enables you to fix vulnerabilities and protect sensitive data, preserving your customers’ confidence while complying with data protection rules. SSL health checks are among the most important tests performed here.
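
One small part of an SSL health check, certificate expiry, can be sketched with Python's standard library. This is a simplified illustration, not a full health check: it ignores chain validation details, protocol versions, and cipher strength. The function names and the idea of warning below some number of days are assumptions for the example.

```python
import socket
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Convert a certificate 'notAfter' string into days remaining."""
    expires_at = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires_at - current) / 86400


def fetch_not_after(hostname: str, port: int = 443, timeout: float = 10.0) -> str:
    """Open a TLS connection and return the certificate's 'notAfter' field."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]
```

A monitor could call `days_until_expiry(fetch_not_after("example.com"))` once a day and raise an alert when the result drops below a chosen threshold, for example 14 days.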

Top 8 Website Monitoring Tools of 2024 (Free and Paid)

Let’s look at the best website monitoring solutions available, including both free and paid choices that match all budgets and needs.

1. Xitoring (Paid + FREE)

Perfect for startups and small to medium businesses, Xitoring provides essential monitoring features without breaking the bank. Its uptime checks and alerting features ensure you’re always in the loop about your website’s status. Besides uptime monitoring, it also provides Linux and Windows server monitoring.

Features:

- Real-time performance monitoring

- 20 monitors with 1-minute checks in the free plan

- Advanced SSL monitoring included with SSL health checks

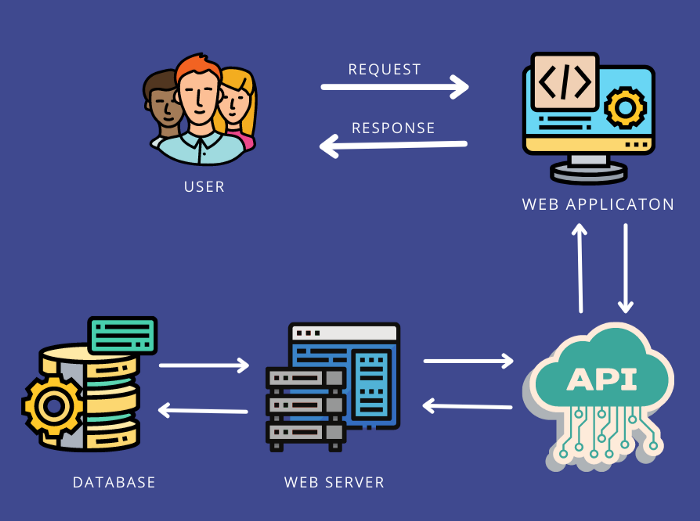

- API monitoring, which allows you to keep an eye on your API with third-party integrations

- Over 15 probing nodes for monitoring your website

- Alerting via email, SMS, WhatsApp, and various notification channels

- Maintenance windows to pause monitoring during planned downtime

- Customizable public status page

- Customizable dashboards and reporting

Benefits: Xitoring’s simple setup and operation make it a popular choice among startups and small to medium enterprises. The tool’s free tier provides critical monitoring functions, making it accessible to businesses with limited resources. Its integrated Linux and Windows monitoring agents let you cover all monitoring requirements in one place. You can monitor server software such as Apache, Nginx, MySQL, Docker, and many others that websites depend on.

2. Pingdom (Paid)

Pingdom is a website monitoring tool that provides a strong set of capabilities such as real-time monitoring, performance analysis, and uptime tracking. It’s a go-to solution for organizations that need precise data to improve customer experiences across the board.

Features:

- Real-time performance monitoring

- Uptime and response time tracking

- Page speed analysis tools

- Transaction monitoring for e-commerce and sign-up processes

- Alerting via email, SMS, and integrations with apps like Slack

Benefits: Pingdom offers intuitive dashboards that make it easy to understand complex data at a glance. Its robust reporting capabilities allow for historical performance analysis, helping to identify trends and potential issues.

3. Site24x7 (Paid)

Site24x7 is a solution designed for enterprises with complex needs, providing complete monitoring for websites, servers, and cloud services, as well as AI-powered analytics.

Features:

- Website, server, and network monitoring from over 60 locations worldwide

- Application performance monitoring (APM)

- Cloud services monitoring

- Real user monitoring (RUM)

- Log management and AI-powered analytics

Benefits: Site24x7 provides a comprehensive suite of monitoring tools, making it an all-in-one solution for larger organizations. Its global monitoring capabilities ensure that you can track your site’s performance from your users’ locations.

4. Datadog (Paid)

Datadog offers comprehensive analytics, real-time performance tracking, and broad integration possibilities, making it suitable for precise analysis and customization.

Features:

- Real-time performance metrics

- Advanced analytics and dashboarding

- Integration with over 400 services

- Log management and APM

- Synthetic monitoring to test website and API endpoints

Benefits: Datadog excels in customization and depth of analysis, offering granular insights into website and application performance. Its integrations make it a powerful tool for teams using a variety of cloud services and technologies.

5. New Relic (Paid)

New Relic focuses on application performance, making it an ideal choice for enterprises looking to optimize their online applications with real-user data and application performance monitoring.

Features:

- Application performance monitoring

- Real user monitoring

- Synthetic transactions to simulate user interactions

- Serverless function monitoring

- Infrastructure monitoring

Benefits: New Relic focuses on application performance, providing detailed insights that help developers optimize their code and infrastructure. Its scalability makes it suitable for businesses of all sizes.

8. Uptrends (Paid)

Uptrends’ worldwide monitoring network sets it apart, making it the ideal solution for businesses that want thorough performance data from around the world.

Features:

- Uptime, transaction, and server monitoring

- Real user monitoring (RUM)

- Global checkpoint network

- Customizable dashboards and reporting

- API monitoring

Benefits: Uptrends provides detailed insights into website performance from a global perspective, making it easy to pinpoint issues affecting users in specific regions.

Choosing the Right Tool for Your Needs

Choosing the best website monitoring solution requires a clear understanding of your goals and constraints. Consider the budget, desired features (e.g., real-time alerts, performance benchmarks, worldwide monitoring), ease of use, integration possibilities, and support level. Weigh these factors against the cost to ensure you get value for your investment. Remember that the goal is to increase your website’s performance and reliability, which directly contributes to a better user experience and commercial success.